Project planning is an aspect of Project Management that comprises various processes. The aim of these processes is to ensure that the various project tasks are well coordinated and that they meet the project objectives, including timely completion of the project.

What is Project Planning?

Project Planning is an aspect of Project Management that focuses heavily on Project Integration. The project plan reflects the current status of all project activities and is used to monitor and control the project.

The Project Planning tasks ensure that various elements of the Project are coordinated and therefore guide the project execution.

Project Planning helps in

- Facilitating communication

- Monitoring/measuring the project progress, and

- Providing overall documentation of assumptions and planning decisions

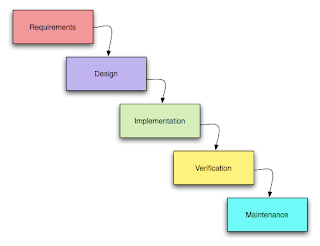

The Project Planning Phases can be broadly classified as follows:

- Development of the Project Plan

- Execution of the Project Plan

- Change Control and Corrective Actions

Project Planning is an ongoing effort throughout the Project Lifecycle.

Why is it important?

“If you fail to plan, you plan to fail.”

Project planning is crucial to the success of the Project.

Careful planning right from the beginning of the project can help to avoid costly mistakes. It increases confidence that the project execution will accomplish its goals on schedule and within budget.

What are the steps in Project Planning?

Project Planning spans the various aspects of the project. Generally, Project Planning is considered to be the process of estimating, scheduling and assigning the project's resources in order to deliver an end product of suitable quality. However, it is much more than that: it can assume a strategic role that determines the very success of the project. Developing the Project Plan is one of the crucial steps in Project Planning.

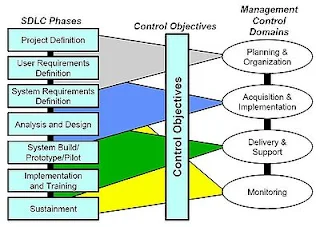

Typically, Project Planning includes the following types of planning:

1) Project Scope Definition and Scope Planning

2) Quality Planning

3) Project Activity Definition and Activity Sequencing

4) Time, Effort and Resource Estimation

5) Risk Factors Identification

6) Schedule Development

7) Cost Estimation and Budgeting

8) Organizational and Resource Planning

9) Risk Management Planning

10) Project Plan Development and Execution

11) Performance Reporting

12) Planning Change Management

13) Project Rollout Planning

We now briefly examine each of the above steps:

1) Project Scope Definition and Scope Planning:

In this step we document the project work that would help us achieve the project goal. We document the assumptions, constraints, user expectations, Business Requirements, Technical requirements, project deliverables, project objectives and everything that defines the final product requirements. This is the foundation for a successful project completion.

2) Quality Planning:

The relevant quality standards are determined for the project. This is an important aspect of Project Planning. Based on the inputs captured in the previous steps, such as the project scope, requirements and deliverables, the various factors influencing the quality of the final product are determined. The processes required to deliver the product as promised and to the required standards are then defined.

3) Project Activity Definition and Activity Sequencing:

In this step we define all the specific activities that must be performed to produce the various product deliverables. Activity sequencing then identifies the interdependencies among the activities defined.
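As a rough illustration of activity sequencing, the sketch below orders a handful of activities so that every activity comes after the ones it depends on. The activity names and the `sequence_activities` helper are hypothetical, chosen only for this example; the ordering itself uses a topological sort from Python's standard `graphlib` module.

```python
from graphlib import TopologicalSorter

# Hypothetical activities: each key maps to the set of activities
# that must be finished before it can start.
dependencies = {
    "gather requirements": set(),
    "design": {"gather requirements"},
    "build": {"design"},
    "write test cases": {"gather requirements"},
    "test": {"build", "write test cases"},
}


def sequence_activities(deps):
    """Return one valid execution order for the activities.

    Raises graphlib.CycleError if the dependencies are circular,
    which usually signals a mistake in the activity definitions.
    """
    return list(TopologicalSorter(deps).static_order())


order = sequence_activities(dependencies)
print(order)
```

Several valid orders may exist for the same set of dependencies; the sort only guarantees that no activity appears before one of its predecessors.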

4) Time, Effort and Resource Estimation:

Once the scope, activities and activity interdependencies are clearly defined and documented, the next crucial step is to determine the effort required to complete each of the activities. See the article on “Software Cost Estimation” for more details. The effort can be calculated using one of the many techniques available, such as Function Points, Lines of Code, Complexity of Code, Benchmarks, etc.

This step clearly estimates and documents the time, effort and resources required for each activity.
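The techniques named above need sizing data that is specific to each project, so the sketch below uses a different and simpler technique purely for illustration: the three-point (PERT) estimate, which combines an optimistic, a most likely and a pessimistic figure for each activity. The `Estimate` class, the activity names and all numbers are assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """Three-point effort estimate for one activity, in person-days."""
    optimistic: float
    most_likely: float
    pessimistic: float

    def expected(self) -> float:
        # PERT weighted average: the most likely value counts four times.
        return (self.optimistic + 4 * self.most_likely + self.pessimistic) / 6

    def std_dev(self) -> float:
        # Common PERT approximation of the spread of the estimate.
        return (self.pessimistic - self.optimistic) / 6


# Hypothetical figures for two activities.
estimates = {
    "design": Estimate(optimistic=3, most_likely=5, pessimistic=10),
    "build": Estimate(optimistic=8, most_likely=10, pessimistic=18),
}

total = sum(e.expected() for e in estimates.values())
for name, e in estimates.items():
    print(f"{name}: {e.expected():.1f} person-days (+/- {e.std_dev():.1f})")
print(f"total expected effort: {total:.1f} person-days")
```

Recording the spread alongside the expected value makes it visible which activities carry the most estimation uncertainty.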

5) Risk Factors Identification:

“Expecting the unexpected and facing it”

It is important to identify and document the risk factors associated with the project based on the assumptions, constraints, user expectations, specific circumstances, etc.

6) Schedule Development:

The time schedule for the project can be arrived at based on the activities, interdependence and effort required for each of them. The schedule may influence the cost estimates, the cost benefit analysis and so on.

Project Scheduling is one of the most important tasks of Project Planning and also one of the most difficult. In very large projects, several teams may work on the project in parallel, yet their work may be interdependent.

Various factors can make it harder to schedule a project successfully:

- Teams not directly under our control

- Resources without enough experience

Popular tools such as Gantt charts can be used for creating and reporting schedules.
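To show how activities, interdependence and effort combine into a schedule, here is a minimal sketch of a forward pass: each activity starts as soon as all of its predecessors have finished. The activity names, durations and the `earliest_finish` helper are hypothetical, and the sketch assumes unlimited resources, which real schedules rarely have.

```python
from graphlib import TopologicalSorter

# Hypothetical durations in working days.
durations = {
    "requirements": 5,
    "design": 6,
    "build": 11,
    "test cases": 4,
    "test": 5,
}

# Each activity maps to the activities that must finish first.
predecessors = {
    "requirements": set(),
    "design": {"requirements"},
    "build": {"design"},
    "test cases": {"requirements"},
    "test": {"build", "test cases"},
}


def earliest_finish(durations, predecessors):
    """Forward pass: earliest finish day of every activity."""
    finish = {}
    for activity in TopologicalSorter(predecessors).static_order():
        # An activity can start once its latest predecessor is done.
        start = max((finish[p] for p in predecessors[activity]), default=0)
        finish[activity] = start + durations[activity]
    return finish


finish = earliest_finish(durations, predecessors)
project_duration = max(finish.values())
print(finish)
print("project duration:", project_duration, "days")
```

In this example "test cases" finishes on day 9 while "build" finishes on day 22, so the test cases could slip by several days without delaying the project, whereas any delay to "build" delays the end date.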

7) Cost Estimation and Budgeting:

Based on the information collected in all the previous steps, it is possible to estimate the cost involved in executing and implementing the project. See the article on "Software Cost Estimation" for more details. A Cost Benefit Analysis can then be prepared for the project. Based on the cost estimates, the budget is allocated for the project.
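A minimal sketch of how effort estimates might be turned into a budget figure and a simple benefit-cost ratio follows. The hourly rate, the contingency percentage, the expected benefit and both function names are assumptions made for illustration; real projects would also account for equipment, facilities and other non-labour costs.

```python
def estimate_cost(effort_hours, hourly_rate, contingency=0.25):
    """Labour cost for all activities plus a contingency reserve.

    effort_hours: dict mapping activity name to estimated hours.
    contingency: fraction added on top of the base cost (assumed value).
    """
    base = sum(effort_hours.values()) * hourly_rate
    return base * (1 + contingency)


def benefit_cost_ratio(expected_benefit, cost):
    """A ratio above 1 means the expected benefit exceeds the cost."""
    return expected_benefit / cost


# Hypothetical effort figures in hours.
effort_hours = {"design": 40, "build": 120, "test": 40}

budget = estimate_cost(effort_hours, hourly_rate=50)
ratio = benefit_cost_ratio(expected_benefit=25000, cost=budget)
print(f"budget: {budget:.2f}, benefit-cost ratio: {ratio:.2f}")
```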

8) Organizational and Resource Planning:

Based on the activities identified, the schedule and the budget allocation, the resource types and the individual resources are identified. One of the primary goals of Resource Planning is to ensure that the project is run efficiently. This can only be achieved by keeping all the project resources as fully utilized as possible. Success depends on accurately predicting the resource demands that will be placed on the project. Resource Planning is an iterative process, and it is necessary in order to optimize the use of resources throughout the project life cycle, thus making the project execution more efficient. There are various types of resources: Equipment, Personnel, Facilities, Money, etc.
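One simple way to check how fully resources are utilized is to compare the hours assigned to each resource with the hours it has available. The sketch below does this for one planning period; the role names, the hours and the `utilization` helper are hypothetical.

```python
def utilization(assigned_hours, available_hours):
    """Fraction of available time that is assigned, per resource."""
    return {
        name: assigned_hours.get(name, 0) / available_hours[name]
        for name in available_hours
    }


# Hypothetical figures for one week.
available_hours = {"analyst": 40, "developer": 40, "tester": 40}
assigned_hours = {"analyst": 20, "developer": 50, "tester": 40}

usage = utilization(assigned_hours, available_hours)
over_allocated = [name for name, u in usage.items() if u > 1.0]
under_utilized = [name for name, u in usage.items() if u < 1.0]

print(usage)
print("over-allocated:", over_allocated)
print("under-utilized:", under_utilized)
```

Re-running a check like this whenever the schedule changes reflects the iterative nature of Resource Planning described above.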

9) Risk Management Planning:

Risk Management is the process of identifying, analyzing and responding to risks. Based on the risk factors identified, a Risk Resolution Plan is created. The plan analyzes each of the risk factors and its impact on the project, and the possible responses to each of them are planned. Throughout the lifetime of the project these risk factors are monitored and acted upon as necessary.
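A common way to analyze risk factors is to rank them by exposure, taken here as probability multiplied by impact. The sketch below assumes that scoring scheme; the `Risk` class, the example risks and all probabilities and impact figures are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    probability: float   # likelihood between 0.0 and 1.0 (assumed scale)
    impact_days: float   # schedule impact if the risk occurs
    response: str        # planned response

    @property
    def exposure(self) -> float:
        # Expected schedule impact of this risk.
        return self.probability * self.impact_days


# Hypothetical risk register.
risks = [
    Risk("Key developer leaves", 0.25, 20, "Cross-train a second developer"),
    Risk("Dependent team delivers late", 0.5, 16, "Agree on interim milestones"),
    Risk("Inexperienced resources", 0.5, 8, "Schedule training early"),
]

# Highest exposure first, so the most significant risks get attention first.
ranked = sorted(risks, key=lambda r: r.exposure, reverse=True)
for r in ranked:
    print(f"{r.exposure:5.1f} days  {r.description}: {r.response}")
```

Because the risk factors are monitored throughout the project, the probabilities and impacts would be revisited and the ranking recomputed as circumstances change.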

10) Project Plan Development and Execution:

Project Plan Development uses the inputs gathered from all the other planning processes, such as Scope Definition, Activity Identification, Activity Sequencing, Quality Management Planning, etc. A detailed Work Breakdown Structure comprising all the activities identified is used. The tasks are scheduled based on the inputs captured in the steps previously described. The Project Plan documents all the assumptions, activities, schedule and timelines, and drives the project.

Each of the project tasks and activities is periodically monitored. The team and the stakeholders are informed of the progress. This serves as an excellent communication mechanism. Any delays are analyzed, and the project plan may be adjusted accordingly.

11) Performance Reporting:

As described above, the progress of each of the tasks and activities described in the Project Plan is monitored. The progress is compared with the schedule and timelines documented in the Project Plan. Various techniques, such as EVM (Earned Value Management), are used to measure and report the project performance. A wide variety of tools can be used to report the performance of the project, such as PERT charts, Gantt charts, Logical Bar Charts, Histograms, Pie Charts, etc.
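For readers unfamiliar with EVM, the sketch below computes its standard indicators from three inputs: planned value (the budgeted cost of the work scheduled to date), earned value (the budgeted cost of the work actually completed) and actual cost. The function name and the sample figures are assumptions for illustration.

```python
def earned_value_metrics(planned_value, earned_value, actual_cost):
    """Standard Earned Value Management indicators."""
    return {
        # Negative variance means over budget or behind schedule.
        "cost_variance": earned_value - actual_cost,
        "schedule_variance": earned_value - planned_value,
        # An index below 1.0 means over budget or behind schedule.
        "cpi": earned_value / actual_cost,
        "spi": earned_value / planned_value,
    }


# Hypothetical status at a reporting date.
metrics = earned_value_metrics(
    planned_value=10000, earned_value=8000, actual_cost=10000
)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

In this example both indices come out at 0.8: the project has completed 80% of the work planned to date, and each unit of money spent has produced 0.8 units of budgeted work.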

12) Planning Change Management:

Analysis of project performance can necessitate that certain aspects of the project be changed. Requests for changes need to be analyzed carefully, and their impact on the project should be studied. Considering all these aspects the Project Plan may be modified to accommodate this request for Chan